The Multi-Dimensional Reality of AI Sovereignty

How orchestration-layer control redefines what it means to build sovereign AI infrastructure for global enterprises.

The Sovereignty Trap: When Compliance Doesn't Equal Control

Every CTO responsible for enterprise AI eventually faces this dilemma. Your lawyers confirm that your AI infrastructure complies with the data laws of your jurisdiction. Data stays where it must. Your vendor contracts specify where it is stored. Then a key model API raises its prices by 200%, removes a feature your product depends on, or goes down during peak hours, and you confront an uncomfortable reality: you are compliant, but you are not in control.

This gap between compliance and actual operational control is the sovereignty trap. As businesses around the world race to adopt generative AI at scale in 2025, most are discovering that data residency alone does not shield them from the risks they care about: vendor lock-in, unpredictable costs, strategic dependencies, and an inability to adapt when market dynamics change.

GLBNXT is a global AI platform that provides decoupled infrastructure, orchestration, and deployment for AI agents. It gives companies the controls they need to build AI systems they genuinely own while meeting data sovereignty requirements wherever they operate. The problem is not that businesses misunderstand sovereignty; it is that the prevailing way of thinking about AI sovereignty is broken.

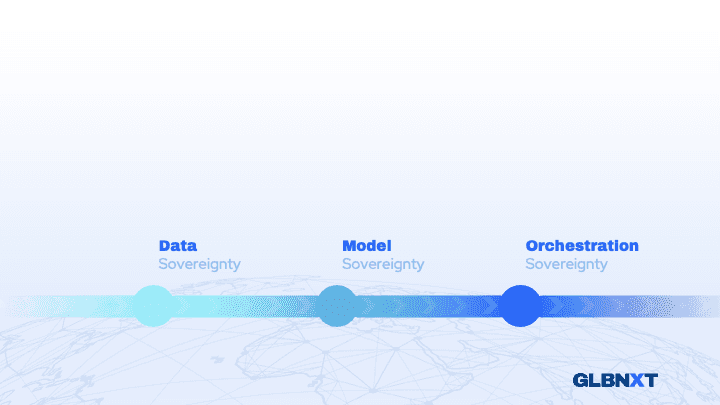

The Evolution: From Data Sovereignty to Architectural Independence

According to Gartner's 2026 CIO Survey, 50% of CIOs and technology executives outside the US now anticipate changes in vendor engagement due to regional sovereignty factors, a trend Gartner warns could reshape "hegemony" in the global technology market over the coming years. This shift isn't limited to any single region: it represents a fundamental reassessment of what sovereignty means in the age of AI.

First Wave: Data Sovereignty (2016-2020)

When data privacy regulations emerged globally (GDPR in Europe, CCPA in California, PIPEDA in Canada, LGPD in Brazil), the industry's answer was simple: keep data in the required jurisdiction. Hyperscalers opened regional data centers. SaaS vendors added local presence. The compliance checkbox was easy to tick.

But data sovereignty solved only one dimension of control. While your data remained in designated regions, the code that processed it, the models that analyzed it, and the APIs that orchestrated it remained in the hands of vendors whose priorities might not align with yours.

The hidden dependencies emerged quickly:

Training data for foundation models came from limited geographic sources with embedded biases

API access policies changed overnight at vendor discretion

Model deprecation cycles didn't align with enterprise product lifecycles

Pricing structures favored certain markets over others

Critical features appeared in select regions first, leaving others behind

Data sovereignty was necessary but insufficient. Enterprises needed the next layer.

Second Wave: Model Sovereignty (2022-2025)

As LLMs became mission-critical, sophisticated organizations worldwide realized they needed control over the models themselves. The solution appeared obvious: self-host open source models.

Global enterprises invested heavily:

Deploying Llama, Mistral, and Falcon on private infrastructure

Building MLOps pipelines for training and fine-tuning

Hiring specialized ML engineering teams

Acquiring GPU capacity in regional data centers

But self-hosting introduced new complexity:

Operational burden: Models require continuous monitoring, updates, and optimization

Integration fragmentation: Each model needs custom API implementations

Performance gaps: Open source models lagged commercial alternatives for certain tasks

Cost unpredictability: GPU utilization patterns proved difficult to forecast

Expertise bottlenecks: Few teams had the depth to manage models at scale

Organizations achieved model independence but lost velocity. Self-hosting created a new trap: freedom through complexity.

Third Wave: Orchestration Sovereignty (2025-Present)

Production deployments at scale have produced a breakthrough insight: sovereignty is not about owning every component; it is about controlling the orchestration layer. Consider modern infrastructure. Few teams build their own container orchestrator, and what matters is not who wrote Kubernetes but who decides how it schedules workloads, allocates resources, and integrates services. The same principle applies to AI.

Orchestration-layer sovereignty means:

You decide which models handle which workloads (not your vendor)

You control routing logic, fallback strategies, and cost optimization rules

You integrate 100+ models through a single, unified interface you own

You can switch providers, add models, or change strategies without rewriting code

Your AI agents execute on infrastructure you control or can audit completely

This is the architecture GLBNXT provides: an orchestration layer built on Kubernetes and Rancher that integrates 100+ LLMs, multiple applications, and open source tooling. It can be deployed with full transparency on GLBNXT infrastructure (including regional GPU clusters for organisations that require regional data residency) or on your own infrastructure anywhere in the world.

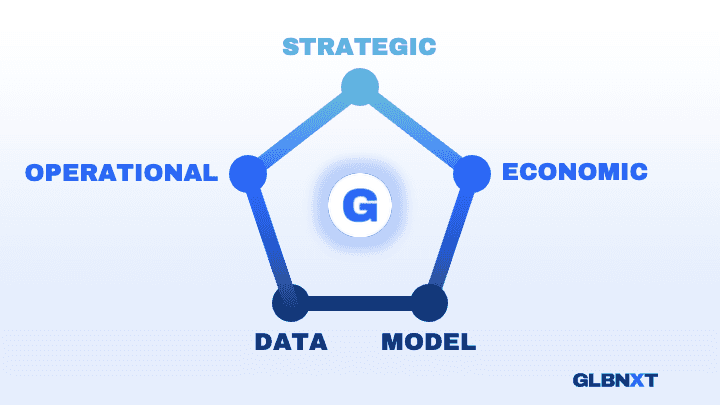

The Five Dimensions of True AI Sovereignty

Modern AI sovereignty operates across five interconnected dimensions. Most vendors solve one or two. Complete architectural independence requires addressing all five.

Data Sovereignty: The Foundation Layer

What it is: Geographic and operational control over data storage, processing, and transmission aligned with your regulatory and business requirements.

Why it matters: Whether you're navigating GDPR in Europe, CCPA in California, industry-specific regulations like HIPAA or SOX, or simply want to maintain control over sensitive business data, data sovereignty is non-negotiable for enterprise AI.

GLBNXT approach: Flexible deployment options that support your data residency requirements. Deploy on GLBNXT-owned infrastructure (including regional data centers for organisations requiring regional residency), or run the entire stack on your own infrastructure, whether that's in North America, APAC, Europe, or your private data center.

The architecture supports data sovereignty requirements across jurisdictions: GDPR-compliant operations in Europe, CCPA-aligned deployments in California, or custom residency requirements for regulated industries anywhere.

But this is just the foundation. Data residency alone doesn't protect you from vendor dependency, cost volatility, or strategic lock-in.

Model Sovereignty: Independence from Vendor Lifecycles

What it is: Freedom from dependency on any single model provider's pricing, availability, or deprecation decisions.

Why it matters: When GPT-4 rate limits your application, when Claude changes API semantics, when a model you depend on is discontinued, single-vendor dependency becomes an existential product risk. This concern is universal, regardless of where your organization operates. The traditional dilemma:

• Use commercial APIs: Fast deployment, but total vendor dependency

• Self-host open source: Independence, but operational complexity

GLBNXT approach: Integration with 100+ LLMs (OpenAI, Anthropic, Cohere, Mistral, Llama, Falcon, DeepSeek, Qwen, and more) through a single orchestration interface. Your application code remains stable while the underlying model changes dynamically according to your rules for cost optimisation, performance, or availability. You write against the orchestration API once; the models beneath can evolve without breaking your code. This is model sovereignty without the operational burden.
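To make "write against the orchestration API once" concrete, here is a minimal Python sketch of a provider-agnostic interface. It is an illustration under stated assumptions, not GLBNXT's API: `ChatModel`, `EchoAdapter`, `Orchestrator`, and `summarize` are hypothetical names, and `EchoAdapter` stands in for a real provider SDK so the example runs without network access.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


class ChatModel(Protocol):
    """Minimal interface every provider adapter is assumed to satisfy."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoAdapter:
    """Hypothetical stand-in for a real provider SDK: it just tags the prompt."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class Orchestrator:
    """Application code depends on this class, never on a provider directly."""

    def __init__(self) -> None:
        self._models: Dict[str, ChatModel] = {}
        self._active = ""

    def register(self, alias: str, model: ChatModel) -> None:
        self._models[alias] = model

    def use(self, alias: str) -> None:
        # Switching providers is a configuration decision, not a code change.
        if alias not in self._models:
            raise KeyError(f"unknown model alias: {alias}")
        self._active = alias

    def complete(self, prompt: str) -> str:
        return self._models[self._active].complete(prompt)


def summarize(orch: Orchestrator, text: str) -> str:
    # Business logic is identical whichever model is currently active.
    return orch.complete(f"Summarize: {text}")
```

Calling `orch.use("backup")` instead of `orch.use("primary")` changes the model behind `summarize` without touching its code. In a real deployment the active alias would come from configuration rather than a hard-coded call.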

Operational Sovereignty: Control Over the Orchestration Logic

What it is: The ability to define, audit, and modify how AI workloads are routed, executed, and monitored within your infrastructure.

Why it matters: Vendor lock-in actually happens at the orchestration layer, not the data layer. If your vendor controls the routing logic, the fallback strategies, and the integration patterns, you don't control your AI stack; you're renting their architecture. What true operational sovereignty looks like:

• Transparent routing logic you can inspect and modify

• Customizable fallback strategies when primary models fail

• Cost optimization rules you define (not what your vendor decides is "optimal")

• Full observability into model selection, latency, token usage, and errors

• The ability to A/B test models in production under your control
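The fallback and observability items above can be sketched as an ordered provider chain that records every attempt. This is a minimal illustration, not a product API: providers are assumed to be plain Python callables (real SDK clients would need thin wrappers), and `FallbackRouter` and `CallRecord` are hypothetical names.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

# Hypothetical shape: each provider is (name, callable taking a prompt).
Provider = Tuple[str, Callable[[str], str]]


@dataclass
class CallRecord:
    """One attempt, as it might be exported to an observability stack."""

    model: str
    ok: bool
    latency_s: float
    error: str = ""


@dataclass
class FallbackRouter:
    providers: Sequence[Provider]  # ordered: primary first, then fallbacks
    log: List[CallRecord] = field(default_factory=list)

    def complete(self, prompt: str) -> str:
        for name, call in self.providers:
            start = time.perf_counter()
            try:
                result = call(prompt)
            except Exception as exc:  # any provider error moves on to the next one
                elapsed = time.perf_counter() - start
                self.log.append(CallRecord(name, False, elapsed, repr(exc)))
                continue
            self.log.append(CallRecord(name, True, time.perf_counter() - start))
            return result
        raise RuntimeError("all providers failed")
```

Because the log captures model name, success flag, latency, and error for each attempt, it can be forwarded to whatever observability tooling you already run. Narrowing the `except` clause to retryable errors (timeouts, rate limits) would be a sensible refinement.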

GLBNXT approach: Built on open standards (Kubernetes, Rancher) with open source tooling (n8n, OpenWebUI, PostgreSQL, S3-compatible storage, Supabase, Ollama, and many more). You define the orchestration rules. You control model routing. You own the integration logic. The platform provides the infrastructure, but you maintain architectural control.

When you need to optimize for cost, you adjust the routing rules. When regulations change, you modify the compliance logic. When a new model emerges, you integrate it without vendor approval. This is operational sovereignty.

Economic Sovereignty: Predictable, Transparent Cost Structures

What it is: Protection from unpredictable pricing changes, hidden costs, and vendor pricing power in AI infrastructure.

Why it matters: Between 2023 and 2025, enterprises globally saw:

• OpenAI API pricing changes of 10-50% across model versions

• Surprise rate limiting during high-demand periods

• Unanticipated egress costs when extracting data

• Volume discounting structures that disadvantaged smaller deployments

• Regional pricing disparities with no transparent justification

When your vendor controls pricing and you're locked into their API semantics, you have no negotiation leverage. Economic sovereignty is strategic sovereignty.

GLBNXT approach: Transparent pricing with predictable scaling costs. More importantly, because you control the orchestration layer, you can:

• Route expensive queries to cost-effective open source models

• Implement intelligent caching to reduce redundant API calls

• Switch to alternative providers when pricing becomes unfavorable

• Optimize between self-hosted and API-based models based on volume economics
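The caching point above can be illustrated with exact-match response caching. This sketch is not GLBNXT's implementation: `CachingClient` is a hypothetical name, the wrapped `call` function stands in for any provider client, and the cache is an unbounded in-memory dict with no expiry.

```python
import hashlib
from typing import Callable, Dict


class CachingClient:
    """Memoizes identical (model, prompt) requests in an in-memory dict."""

    def __init__(self, call: Callable[[str, str], str]) -> None:
        self._call = call  # hypothetical provider call: (model, prompt) -> text
        self._cache: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # The model is part of the key: the same prompt sent to a different
        # model is a different request and must not share a cached answer.
        raw = f"{model}\x00{prompt}".encode("utf-8")
        return hashlib.sha256(raw).hexdigest()

    def complete(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        result = self._call(model, prompt)
        self._cache[key] = result
        return result
```

Exact-match caching only pays off when identical prompts recur and responses can safely be reused; a production cache would add eviction, expiry, and tenant isolation.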

The orchestration layer gives you negotiating leverage on price. When you're not locked into a single vendor's API, that vendor can't unilaterally dictate terms.

Strategic Sovereignty: Long-Term Architectural Flexibility

What it is: The ability to evolve your AI architecture as technology, regulations, and business requirements change without being constrained by past vendor decisions.

Why it matters: AI is evolving faster than any technology in enterprise history. Many of the models, frameworks, and best practices that define production systems today are likely to be obsolete within 18 months.

Strategic sovereignty means you can adapt without migration projects, rewrites, or vendor negotiations. You architect for change from day one. What strategic sovereignty requires:

• No vendor lock-in: Your code doesn't depend on proprietary APIs

• Modular architecture: You can replace components without system rewrites

• Open standards: Your integrations work with any compliant provider

• Transparent dependencies: You know exactly what relies on what

GLBNXT approach: Modular platform design means you can use the entire stack or integrate individual components. Need GPU infrastructure? Use GLBNXT clusters (deployed globally or in specific regions). Have your own data center? Deploy GLBNXT orchestration on your own Kubernetes clusters anywhere in the world. Want to integrate specific tools? Connect through open standards. The platform is built on Kubernetes, Rancher, Hugging Face, Ollama, n8n, Supabase, Elasticsearch, and NVIDIA NIM, not on proprietary frameworks that lock you into a single vendor's ecosystem. When your requirements change, the architecture can adapt.

Why Orchestration Is Where Sovereignty Actually Lives

McKinsey's 2025 State of AI research found that while 88% of organisations now use AI regularly, most remain stuck in experimentation or pilot phases, unable to achieve enterprise-level impact. The research identifies a critical gap: organisations that capture meaningful value aren't simply adding AI to existing workflows; they are redesigning how that work is done. "Companies capturing meaningful value are re-architecting workflows, decision points, and task ownership," the report emphasises. That is precisely what orchestration-layer sovereignty enables, and it is what distinguishes truly independent AI infrastructure from vendor-dependent implementations.

Here's the uncomfortable truth that emerged from production deployments: the vendor who controls your orchestration layer controls your AI strategy, regardless of where your data lives. Consider what the orchestration layer actually does:

Decides which model processes which request

Routes traffic based on cost, performance, and availability

Manages authentication, rate limiting, and access control

Collects telemetry and shapes your observability

Integrates with your data sources, applications, and workflows

Determines fallback logic when primary systems fail

If this layer is proprietary, closed-source, and vendor-controlled, then every architectural decision runs through your vendor's priorities, not yours.

Real-world scenario: Your application needs to process sensitive customer data through an LLM. Your data governance policy requires specific geographic processing. Your cost policy requires using the most economical model that meets quality thresholds.

Without orchestration sovereignty:

You're locked to your vendor's model selection

You can't implement dynamic cost optimisation

You can't inspect routing decisions or audit compliance

When your vendor raises prices, you have no leverage

With orchestration sovereignty (GLBNXT approach):

Your orchestration rules decide: "route to Mistral-Large for sensitive data, fall back to GPT-4 for complex reasoning, cache aggressively to minimize costs"

You audit every routing decision in your observability tools

When Mistral releases a better model, you update your configuration; no code changes are required

When economics shift, you adjust model selection without vendor negotiations
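The routing rules in this scenario can be expressed as data rather than code, which is what makes configuration-only changes possible. The sketch below assumes a JSON routing document; the rule format, the `Request` type, the `route` function, and every `relative_cost` and `quality` figure are hypothetical placeholders, not real prices or benchmark scores.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict

# Hypothetical routing document. In practice it might live in a file or a
# Kubernetes ConfigMap; relative_cost and quality are illustrative placeholders.
ROUTING_CONFIG: Dict[str, Any] = json.loads("""
{
  "rules": [
    {"if": "sensitive", "route_to": "mistral-large"},
    {"if": "complex_reasoning", "route_to": "gpt-4"}
  ],
  "default": {"min_quality": 0.7},
  "models": {
    "mistral-large": {"relative_cost": 8,  "quality": 0.85},
    "gpt-4":         {"relative_cost": 30, "quality": 0.95},
    "llama-3-8b":    {"relative_cost": 1,  "quality": 0.65},
    "mixtral-8x7b":  {"relative_cost": 2,  "quality": 0.75}
  }
}
""")


@dataclass
class Request:
    prompt: str
    sensitive: bool = False
    complex_reasoning: bool = False


def route(req: Request, config: Dict[str, Any]) -> str:
    # 1. Policy rules are evaluated in order; the first matching flag wins.
    for rule in config["rules"]:
        if getattr(req, rule["if"]):
            return rule["route_to"]
    # 2. Otherwise choose the cheapest model that clears the quality floor.
    floor = config["default"]["min_quality"]
    eligible = [
        (spec["relative_cost"], name)
        for name, spec in config["models"].items()
        if spec["quality"] >= floor
    ]
    if not eligible:
        raise LookupError("no model meets the quality threshold")
    return min(eligible)[1]
```

Raising or lowering `min_quality`, reordering rules, or adding a newly released model under `models` changes routing behaviour without editing `route` itself.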

The orchestration layer is where control lives. Data sovereignty and model sovereignty are necessary, but orchestration sovereignty is where independence becomes operational.

The GLBNXT Architecture: Sovereignty Across All Five Dimensions

As the World Economic Forum notes in its analysis of digital sovereignty trends, sovereignty fundamentally means "the ability to have control over your own digital destiny, the data, hardware and software that you rely on and create." This definition transcends geography: whether your organisation operates in Europe, North America, APAC, or anywhere else, the question remains the same: do you control your AI infrastructure, or does your vendor control it for you? GLBNXT is purpose-built to deliver sovereignty across every dimension: data, models, operations, economics, and strategy, regardless of where you operate.

Sovereign Infrastructure with Global Flexibility

Deploy on GLBNXT-owned infrastructure (including GPU clusters and regional data centres for organisations requiring local residency) or run the entire stack on your own infrastructure anywhere in the world. Powered by Kubernetes and Rancher for proven, scalable orchestration.

Flexible deployment options:

GLBNXT provides three distinct infrastructure models, deployable independently or in hybrid configurations:

GLBNXT-Owned Infrastructure

Run on GLBNXT-managed GPU clusters and data centres with complete operational transparency. Full control over infrastructure operations, predictable performance, and direct accountability.

Regional Sovereign Data Centers

Deploy on infrastructure in jurisdictions with strict data sovereignty protections, operated outside the reach of foreign government access laws such as the US CLOUD Act. Examples include European or Nordic data centres, which help protect against extraterritorial surveillance.

Hyperscaler Deployment

Deploy on AWS, Azure, or Google Cloud Platform in any global region. Leverage existing cloud investments while maintaining orchestration-layer sovereignty through GLBNXT's unified platform.

Hybrid Configurations

Combine deployment models strategically: sensitive workloads on sovereign infrastructure, development environments on hyperscalers, production on GLBNXT-owned clusters, all orchestrated through a single control plane.

The architecture adapts to your data sovereignty requirements, whether that's protection from foreign surveillance laws, GDPR compliance, CCPA alignment, or industry-specific regulations, while delivering identical orchestration capabilities across all deployment models.

Model Independence Through Unified Orchestration

Integrate 100+ LLMs through a single interface, from open source models (Mistral, Llama, Falcon, Qwen, DeepSeek, and many more) to commercial APIs (OpenAI, Anthropic, Cohere, Perplexity). Your application code remains stable. The underlying models can change based on your requirements. This isn't just multi-model support; it's true model independence. You write code or build solutions once and deploy against an abstraction layer you control.

Operational Control Through Open Standards

Built on Kubernetes, Rancher, Ollama, Hugging Face, n8n, OpenWebUI, Supabase, Elasticsearch, NVIDIA NIM, and many more. Every component is either open source or built on open standards that you can audit, modify, and extend. You control the orchestration rules. You define routing logic. You own the integration patterns. The platform provides infrastructure and tooling, but architectural control remains with you.

Transparent Economics and Cost Optimization

Because you control orchestration, you can implement intelligent cost strategies:

Route low-complexity queries to cost-effective open source models

Reserve expensive commercial models for high-value tasks

Implement caching and batching to reduce redundant API calls

Monitor cost per request and adjust strategies in real-time
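Monitoring cost per request can start as simply as multiplying token counts by per-model rates and flagging models whose running average exceeds a budget. In this sketch `CostMeter` is a hypothetical name, the prices are made-up placeholders rather than any vendor's actual rates, and token counts are assumed to come from provider responses or your own tokenizer.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class CostMeter:
    # Placeholder (input, output) prices per 1,000 tokens; NOT real vendor rates.
    prices: Dict[str, Tuple[float, float]]
    budget_per_request: float
    totals: Dict[str, float] = field(default_factory=dict)
    counts: Dict[str, int] = field(default_factory=dict)

    def record(self, model: str, input_tokens: int, output_tokens: int) -> float:
        """Compute and accumulate the cost of one request; return that cost."""
        in_price, out_price = self.prices[model]
        cost = (input_tokens * in_price + output_tokens * out_price) / 1000
        self.totals[model] = self.totals.get(model, 0.0) + cost
        self.counts[model] = self.counts.get(model, 0) + 1
        return cost

    def average_cost(self, model: str) -> float:
        n = self.counts.get(model, 0)
        return self.totals.get(model, 0.0) / n if n else 0.0

    def over_budget(self) -> List[str]:
        # Models whose running average exceeds the per-request budget are
        # candidates for re-routing to a cheaper tier.
        return sorted(m for m in self.counts if self.average_cost(m) > self.budget_per_request)
```

Feeding the result of `over_budget()` back into the routing configuration, for example by demoting a model's priority, is one way to adjust strategies in real time; the adjustment policy itself is left open here.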

Economic sovereignty means you optimize for your business model, not your vendor's pricing incentives.

Strategic Flexibility Through Modularity

Use the full GLBNXT platform or integrate individual modules:

Infrastructure layer: GLBNXT-managed clusters (global regions) or your own infrastructure

Orchestration layer: MLOps, CI/CD, model deployment, monitoring

Knowledge layer: Vector search, knowledge graphs, data pipelines

Agent layer: No-code interfaces, automation, multiple app integrations

The modular architecture means you're never locked in. Start with one layer, expand as needed, and replace components as requirements evolve.

Practical Implications: The CTO's Sovereignty Checklist

If you're evaluating AI infrastructure for sovereignty, ask these questions:

Data Sovereignty

[ ] Can we deploy in the geographic regions our business requires?

[ ] Can we audit exactly where data is stored and processed at every step?

[ ] Are we compliant with applicable regulations (GDPR, CCPA, HIPAA, SOX, industry-specific requirements)?

[ ] Can we move data processing locations if requirements change?

Model Sovereignty

[ ] Can we switch models without rewriting application code?

[ ] Do we have access to both commercial APIs and open source models through the same interface?

[ ] What happens if our primary model provider changes pricing, deprecates a model, or exits the market?

[ ] Can we A/B test models in production without vendor approval?

Operational Sovereignty

[ ] Do we control the routing and orchestration logic, or is it proprietary to our vendor?

[ ] Can we audit and modify how AI workloads are executed?

[ ] Is the orchestration layer built on open standards we can inspect?

[ ] Do we have full observability into model selection, costs, and performance?

Economic Sovereignty

[ ] Can we implement cost optimization strategies independently of our vendor's incentives?

[ ] Are we protected from unilateral pricing changes?

[ ] Can we dynamically route between providers based on economics?

[ ] Do we have transparent, predictable infrastructure costs?

Strategic Sovereignty

[ ] Is our architecture modular enough to replace components without full system rewrites?

[ ] Are we locked into proprietary APIs, or using open standards?

[ ] Can we adapt as AI technology evolves without vendor dependency?

[ ] Do we have genuine optionality if requirements change?

If you answered "no" to any of these, you don't have sovereignty; you have compliance theater.

Conclusion: Sovereignty by Design, Not by Declaration

Data residency was the first step. Open source models were the second. But true AI sovereignty lives in the orchestration layer, where routing decisions are made, costs are controlled, and architectural flexibility is preserved.

GLBNXT provides sovereignty across all dimensions for enterprises worldwide:

Infrastructure sovereignty through flexible deployment (GLBNXT-managed infrastructure globally, or your own infrastructure anywhere)

Model sovereignty through integration with 100+ LLMs via unified orchestration

Operational sovereignty through open standards and transparent control

Economic sovereignty through intelligent cost optimization you define

Strategic sovereignty through modular architecture and zero vendor lock-in

Most AI platforms offer compliance in select regions. GLBNXT delivers control everywhere: from prototype to enterprise-scale deployment 50% faster, fully modular, and architecturally independent. Built on open source foundations, deployable globally, and orchestrated under your rules.

Sovereignty isn't a compliance checkbox. It's an architectural property. And it begins at the orchestration layer.

https://www.gartner.com/en/articles/ai-human-readiness

https://technologymagazine.com/news/gartner-agentic-ai-to-define-next-wave-of-enterprise-roi

https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

https://www.weforum.org/stories/2025/01/europe-digital-sovereignty/

Discover how GLBNXT can transform your operations. Visit www.glbnxt.com to learn more.

© 2025 GLBNXT B.V. All rights reserved. Unauthorized use or duplication prohibited.